Weighing the Benefits and Risks of AI Autopilots

October 25, 2024

By Sekhar Sarukkai – Cybersecurity@UC Berkeley

In the previous blog, we explored the security challenges associated with AI Copilots, systems that assist with tasks and decisions but still rely on human input. We discussed risks like data poisoning, misuse of permissions, and rogue AI Copilots. As AI systems advance with the emergence of AI agentic frameworks such as LangGraph and AutoGen, the potential for security risks increases—especially with AI Autopilots, the next layer of AI development.

In this final blog of our series, we’ll focus on Layer 3: AI Autopilots, autonomous agentic systems that can perform tasks with little or no human intervention. While they offer tremendous potential for task automation and operational efficiency, AI Autopilots also introduce significant security risks that organizations must address to ensure safe deployment.

Benefits and Risks of AI Autopilots

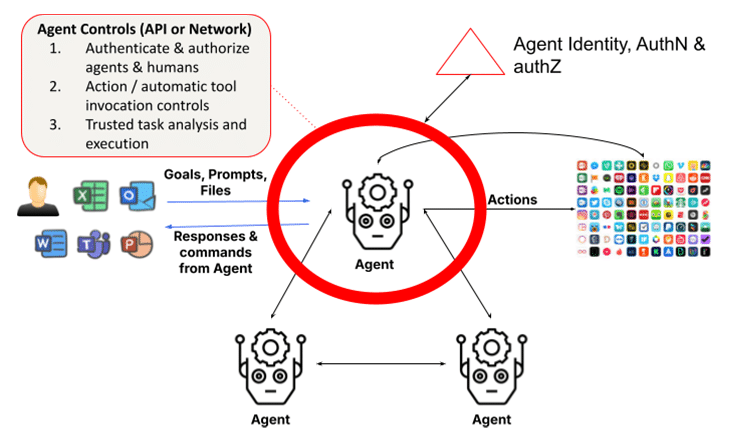

Agentic systems build on large language models (LLMs) and retrieval-augmented generation (RAG). They add the ability to take action via introspection, task analysis, function calling, and leveraging other agents or humans to complete their tasks. This requires agents to use a framework to identify and validate agent and human identities as well as to ensure that the actions and results are trustworthy. The simple view of an LLM interacting with a human in Layer 1 is replaced by a collection of dynamically formed groups of agents that work together to complete a task, multiplying the security concerns. In fact, the most recent release of Claude from Anthropic includes a feature that allows the AI to use computers on your behalf, enabling it to use the tools needed to complete a task autonomously, which is a blessing to users and a challenge to security folks.

1. Rogue or Adversarial Autonomous Actions

AI Autopilots are capable of executing tasks independently based on predefined objectives. However, this autonomy opens up the risk of rogue actions, where an autopilot might deviate from intended behavior due to programming flaws or adversarial manipulation. Rogue AI systems could cause unintended or harmful outcomes, ranging from data breaches to operational failures.

For example, an AI Autopilot managing critical infrastructure systems might accidentally shut down power grids or disable essential functions due to misinterpreted input data or a programming oversight. Once set in motion, these rogue actions could be difficult to stop without immediate intervention.

Adversarial attacks pose a serious threat to AI Autopilots, particularly in industries where autonomous decisions can have critical consequences. Attackers can subtly manipulate input data or the environment to trick AI models into making incorrect decisions. These adversarial attacks are often designed to go undetected, exploiting vulnerabilities in the AI system’s decision-making process.

For example, an autonomous drone could be manipulated into changing its flight path by attackers subtly altering the environment (for example, by placing objects in the drone’s path that disrupt its sensors). Similarly, autonomous vehicles might be tricked into stopping or veering off course by small, hard-to-notice changes to road signs or markings.

Mitigation Tip: Implement real-time monitoring and behavioral analysis to detect any deviations from expected AI behavior. Fail-safe mechanisms should be established to immediately stop autonomous systems if they begin executing unauthorized actions. To defend against adversarial attacks, organizations should implement robust input validation techniques and frequent testing of AI models. Adversarial training, where AI models are trained to recognize and resist manipulative inputs, is essential to ensuring that AI Autopilots can withstand these threats.
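
The monitoring and fail-safe idea in this tip can be illustrated with a minimal sketch. It assumes a very simple notion of "expected behavior": an allowlist of actions plus a cap on how many actions the autopilot may take per monitoring window. The class name `FailSafeMonitor`, the exception `AgentHalted`, and the action names are hypothetical and chosen only for illustration; a production system would use a much richer behavioral baseline.

```python
from dataclasses import dataclass, field


class AgentHalted(Exception):
    """Raised when the fail-safe has stopped the agent."""


@dataclass
class FailSafeMonitor:
    allowed_actions: frozenset      # actions the autopilot is expected to take
    max_actions_per_window: int     # crude behavioral baseline (assumption)
    halted: bool = False
    _count: int = 0
    log: list = field(default_factory=list)

    def check(self, action: str) -> None:
        """Call before every action; raises AgentHalted on a deviation."""
        if self.halted:
            raise AgentHalted("agent already halted")
        self._count += 1
        if action not in self.allowed_actions:
            self._halt(f"unauthorized action: {action}")
        if self._count > self.max_actions_per_window:
            self._halt("action rate above expected baseline")
        self.log.append(action)

    def reset_window(self) -> None:
        """Called by a scheduler at the start of each monitoring window."""
        self._count = 0

    def _halt(self, reason: str) -> None:
        # Once halted, every later check() fails until a human intervenes.
        self.halted = True
        self.log.append(f"HALT: {reason}")
        raise AgentHalted(reason)


if __name__ == "__main__":
    monitor = FailSafeMonitor(frozenset({"read_sensor", "adjust_valve"}), 100)
    monitor.check("read_sensor")
    try:
        monitor.check("shutdown_grid")
    except AgentHalted as exc:
        print("stopped:", exc)
```

The key design choice is that the check runs before the action and the halted state is sticky, so a single deviation stops everything that follows rather than only the offending call.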

2. Lack of Transparency and Ethical Risks

With AI Autopilots operating without direct human oversight, issues of accountability become more complex. If an autonomous system makes a poor decision that results in financial loss, operational disruption, or legal complications, determining responsibility can be difficult. This lack of clear accountability raises significant ethical questions, particularly in industries where safety and fairness are paramount.

Ethical risks also arise when these systems prioritize efficiency over fairness or safety, potentially leading to discriminatory outcomes or decisions that conflict with organizational values. For instance, an AI Autopilot in a hiring system might inadvertently prioritize cost-saving measures over diversity, resulting in biased hiring practices.

Mitigation Tip: Establish accountability frameworks and ethical oversight boards to ensure AI Autopilots align with organizational values. Regular audits and ethical reviews should be conducted to monitor AI decision-making, and clear accountability structures should be established to handle potential legal issues that arise from autonomous actions.
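
Audits of autonomous decisions are only useful if the decision record can be trusted. One possible approach, sketched here as an assumption rather than something this series prescribes, is a hash-chained audit log in which each record commits to the one before it, so that later edits are detectable. The function names and record fields are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first record


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same body always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_record(chain: list, agent_id: str, action: str, rationale: str) -> dict:
    """Append one decision record that commits to the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    body = {
        "agent_id": agent_id,
        "action": action,
        "rationale": rationale,
        "prev_hash": prev_hash,
    }
    record = {**body, "hash": _digest(body)}
    chain.append(record)
    return record


def verify_chain(chain: list) -> bool:
    """Return True only if no record was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for record in chain:
        body = {k: v for k, v in record.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or record["hash"] != _digest(body):
            return False
        prev_hash = record["hash"]
    return True
```

An auditor can run `verify_chain` before reviewing the log. This sketch detects tampering with existing records but not truncation of the newest entries, which would need an externally stored copy of the latest hash.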

3. Agent Identity, Authentication and Authorization

A fundamental issue with multi-agent systems is the need to authenticate the identities of agents and authorize client agent requests. This can pose a challenge if agents can masquerade as other agents or if requesting agent identities cannot be strongly verified. In a future world where escalated-privilege agents communicate with each other and complete tasks, the damage incurred can be instantaneous and hard to detect unless fine-grained authorization controls are strictly enforced.

As specialized agents proliferate and collaborate with each other, authoritative validation of agent identities and their credentials becomes increasingly important to keep rogue agents from infiltrating enterprises. Similarly, permissioning schemes need to account for the automated use of permissions, based on an agent’s classification, role, and function, when completing tasks.

Mitigation Tip: Require every agent to prove its identity with strongly verified credentials before its requests are acted on, and enforce fine-grained authorization scoped to each agent’s classification, role, and function. Regularly review which agents hold elevated privileges so that a masquerading or rogue agent cannot act undetected.
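
To make the identity and authorization requirements of this section concrete, here is a minimal sketch that authenticates an agent's request with an HMAC signature and then checks the requested action against that agent's permitted set. The registry, agent names, secrets, and action names are all hypothetical, and shared-secret HMAC is only one possible mechanism; the sketch also omits replay protection (a nonce or timestamp), which a real deployment would need.

```python
import hashlib
import hmac

# Hypothetical registry: agent id -> (shared secret, permitted actions).
AGENT_REGISTRY = {
    "billing-agent": (b"billing-secret", {"read_invoice", "issue_refund"}),
    "report-agent": (b"report-secret", {"read_invoice"}),
}


def sign_request(agent_id: str, action: str, secret: bytes) -> str:
    """Sign the (agent, action) pair with the agent's secret."""
    message = f"{agent_id}|{action}".encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def authorize(agent_id: str, action: str, signature: str) -> bool:
    """Authenticate the caller first, then apply fine-grained authorization."""
    entry = AGENT_REGISTRY.get(agent_id)
    if entry is None:
        return False                      # unknown agent
    secret, permitted = entry
    expected = sign_request(agent_id, action, secret)
    if not hmac.compare_digest(expected, signature):
        return False                      # identity could not be verified
    return action in permitted            # verified, but is it allowed?
```

An agent that merely claims to be `billing-agent` without holding its secret fails the signature check, and a correctly authenticated `report-agent` is still refused `issue_refund` because that action is outside its role.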

4. Over-Reliance on Autonomy

As organizations increasingly adopt AI Autopilots, there is a growing risk of over-reliance on automation. This happens when critical decisions are left entirely to autonomous systems without human oversight. While AI Autopilots are designed to handle routine tasks, removing human input from critical decisions can lead to operational blind spots and undetected errors. The risk shows up in the tools that agents invoke automatically. It is an issue because, in many cases, these agents have elevated privileges to perform these actions. It is an even bigger issue when agents are autonomous, because prompt injection can be used to force nefarious actions without the user’s knowledge. In addition, multi-agent systems are exposed to the confused deputy problem, in which actions can stealthily escalate privilege.
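
One common way to blunt the confused deputy problem mentioned above is to evaluate a tool call against the whole delegation chain rather than only the agent that finally makes the call. The sketch below assumes a simple rule: the effective permissions are the intersection of the permissions of every principal in the chain. The principals, permission names, and function names are hypothetical.

```python
# Hypothetical permission table for a user and two agents.
PERMISSIONS = {
    "user:alice": {"read_report"},
    "agent:planner": {"read_report", "send_email"},
    "agent:admin-tool": {"read_report", "send_email", "delete_records"},
}


def effective_permissions(delegation_chain: list) -> set:
    """Intersect the permissions of every principal in the chain."""
    if not delegation_chain:
        return set()
    allowed = set(PERMISSIONS.get(delegation_chain[0], set()))
    for principal in delegation_chain[1:]:
        # An unknown principal contributes no permissions at all.
        allowed &= PERMISSIONS.get(principal, set())
    return allowed


def may_invoke(delegation_chain: list, action: str) -> bool:
    """A highly privileged agent cannot exceed what the originator may do."""
    return action in effective_permissions(delegation_chain)
```

With this rule, a request that starts with `user:alice` and ends at `agent:admin-tool` cannot trigger `delete_records`, even though the final agent holds that permission when acting on its own behalf.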

Over-reliance can become especially dangerous in fast-paced environments where real-time human judgment is still required. For example, an AI Autopilot managing cybersecurity could miss the nuances of a rapidly evolving threat, relying on its programmed responses instead of adjusting to unexpected changes.

Mitigation Tip: Human-in-the-loop (HITL) systems should be maintained to ensure that human operators retain control over critical decisions. This hybrid approach allows AI Autopilots to handle routine tasks while humans oversee and validate major decisions. Organizations should regularly evaluate when and where human intervention is necessary to prevent over-reliance on AI systems.
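
A human-in-the-loop gate of the kind described in this tip can be sketched in a few lines. The example assumes the organization maintains a list of critical actions and has some way to ask an operator for approval; `CRITICAL_ACTIONS`, `execute_with_oversight`, and the callback parameters are hypothetical names.

```python
from typing import Callable

# Hypothetical set of actions that always need a human decision.
CRITICAL_ACTIONS = {"transfer_funds", "delete_records", "disable_firewall"}


def execute_with_oversight(
    action: str,
    run: Callable[[], str],
    ask_human: Callable[[str], bool],
) -> str:
    """Run routine actions directly; route critical ones through a human."""
    if action in CRITICAL_ACTIONS and not ask_human(action):
        return f"{action}: blocked pending human approval"
    return run()
```

Here `ask_human` could be backed by a ticketing system or an approval prompt. Routine actions never invoke it, which keeps the operator's attention on the decisions that matter.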

5. Human Legal Identity And Trust

AI Autopilots operate based on predefined objectives and in cooperation with humans. However, this cooperation also requires agents to validate the human entities with whom they are collaborating, as these interactions are not always with authenticated persons using a prompting tool. Consider the deepfake scam in which a finance worker in Hong Kong paid out $25 million after assuming that a deepfake version of the CFO in a web meeting was indeed the real CFO. This highlights the increasing risk of agents that can impersonate humans, especially since impersonation becomes easier with the latest multi-modal models. OpenAI recently warned that a 15-second voice sample would be enough to impersonate a person’s voice. Deepfake videos are not far behind, as illustrated by the Hong Kong case.

Additionally, in certain cases, delegated secret-sharing between humans and agents is essential to complete a task, for example, through a wallet (for a personal agent). In the enterprise context, a financial agent may need to validate the legal identity of humans and their relationships. There is no standardized way for agents to do so today. Without this, agents will not be able to collaborate with humans in a world where humans will increasingly be the Copilots.

This issue becomes particularly dangerous when AI Autopilots inadvertently make decisions based on bad actors impersonating a human collaborator. Without a clear way to digitally authenticate humans, agents are susceptible to acting in ways that conflict with broader business goals, such as safety, compliance, or ethical considerations.

Mitigation Tip: Regular reviews of the user and agent identities involved in AI Autopilot task executions are essential. Organizations should use adaptive algorithms and real-time feedback mechanisms to ensure that AI systems remain aligned with changing user and regulatory requirements. By adjusting objectives as needed, businesses can prevent misaligned goals from leading to unintended consequences.
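
As noted above, there is no standardized way for agents to authenticate humans today. As a stand-in, the sketch below shows one widely used second factor, a time-based one-time password (TOTP, RFC 6238), that an agent could require before acting on a high-value instruction from someone who only appears to be the right person. The choice of TOTP and the function names are assumptions made for illustration; enrolling the secret and proving legal identity are out of scope here.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code using HMAC-SHA1."""
    counter = unix_time // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                       # dynamic truncation
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def human_confirmed(secret: bytes, submitted_code: str, unix_time: int) -> bool:
    """Accept the current and previous time step to tolerate clock drift."""
    previous = max(unix_time - 30, 0)
    candidates = (totp(secret, unix_time), totp(secret, previous))
    return any(hmac.compare_digest(c, submitted_code) for c in candidates)
```

A deepfake of a voice or face does not reveal the secret held on the real person's enrolled device, so an agent that insists on `human_confirmed` before releasing funds is harder to fool with impersonation alone.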

Securing AI Autopilots: Best Practices

In addition to the security controls discussed for the previous two layers, which include protections for LLMs and data controls for Copilots, the agentic layer requires an expanded role for identity and access management (IAM), as well as trusted task execution.

To mitigate the risks of AI Autopilots, organizations should adopt a comprehensive security strategy. This includes:

- Continuous monitoring: Implement real-time behavioral analysis to detect anomalies and unauthorized actions.

- Ethical governance: Establish ethics boards and accountability frameworks to ensure AI systems align with organizational values and legal requirements.

- Adversarial defense: Use adversarial training and robust input validation to prevent manipulation.

- Human oversight: Maintain HITL systems to retain oversight of critical decisions made by AI.

By implementing these best practices, organizations can ensure that AI Autopilots operate securely and in alignment with their business goals.

The Path Forward: Securing Autonomous AI

AI Autopilots promise to revolutionize industries by automating complex tasks, but they also introduce significant security risks. From rogue actions to adversarial manipulation, organizations must stay vigilant in managing these risks. As AI continues to evolve, it’s crucial to prioritize security at every stage to ensure these systems operate safely and in alignment with organizational goals.
