Features & Benefits [Beginner’s Guide]

What is Amazon S3? S3 stands for “Simple Storage Service” and it is a highly scalable, reliable and cost-effective cloud storage service provided by Amazon Web Services (AWS). It offers object storage designed to store and retrieve any amount of data from anywhere on the web.

S3’s primary features include high durability, data availability and security, as well as virtually unlimited scalability. It enables users to store and protect any file type, from websites and mobile applications to data analytics and IoT devices.

S3 stores data as objects within buckets, which are similar to folders. Each object consists of a file and metadata. Users can access and manage objects through the management console, command line interface or AWS software development kit (SDK). S3 automatically copies data across multiple locations within an AWS region to prevent data loss and downtime during a disaster.

Amazon S3 has several use cases, including backup and archiving, disaster recovery, web hosting, big data, data lakes, IoT, and storing and serving static assets like images and videos. It integrates easily with other AWS services, which helps developers build scalable, secure and highly available applications.

S3 follows a straightforward, pay-as-you-go pricing model based on storage usage, requests, data transfer and additional features like data transfer acceleration and cross-region replication. It also allows users to choose from multiple storage classes, optimizing costs based on their data access needs.

What Is Amazon S3?

Amazon S3 is AWS’s object storage service that allows you to store and retrieve data from anywhere regardless of size or type. Amazon S3 was released in 2006 and has since become a widely adopted and reliable storage solution for various use cases due to its scalability, durability, security and cost-effectiveness.

It’s often used for backing up and archiving data because it is reliable and has tools to manage data over time. S3 can be used to host static websites by serving content such as HTML, CSS, JavaScript, images and videos. It also functions as a centralized data lake for storing and analyzing large amounts of structured and unstructured data.

What Are the Main Features of Amazon S3?

The features of Amazon S3 include storage analytics and insights, storage management and monitoring, storage classes, access management and security, data processing, query in place, data transfer, data exchange and performance.

How Does Amazon S3 Work?

Amazon S3 is a component of Amazon Web Services that is designed for data storage. It organizes data as “objects” within containers called “buckets.” Each object, which can be any type of file up to 5 terabytes in size, is stored with descriptive metadata and a unique identifier, or key.
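To make the bucket-and-key model concrete, here is a minimal Python sketch that splits an “s3://” style address into its two parts. The function name, the `S3Location` type and the example bucket are illustrative assumptions, not part of any AWS library.

```python
from typing import NamedTuple


class S3Location(NamedTuple):
    bucket: str  # the container that holds the object
    key: str     # the object's unique identifier inside that bucket


def parse_s3_uri(uri: str) -> S3Location:
    """Split an 's3://bucket/key' URI into bucket and key (hypothetical helper)."""
    prefix = "s3://"
    if not uri.startswith(prefix):
        raise ValueError(f"not an S3 URI: {uri!r}")
    # Everything up to the first "/" is the bucket; the rest is the key.
    bucket, _, key = uri[len(prefix):].partition("/")
    if not bucket:
        raise ValueError("missing bucket name")
    return S3Location(bucket, key)


loc = parse_s3_uri("s3://example-bucket/photos/2024/cat.jpg")
print(loc.bucket)  # example-bucket
print(loc.key)     # photos/2024/cat.jpg
```

Note that the slashes are simply part of the key. What looks like a folder path in the console is a shared key prefix.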

S3 can automatically manage both large and small amounts of data, making data handling easier. The service is engineered to provide exceptional data availability and durability, ensuring that data is accessible at all times and well protected against potential loss.

How to Access and Use Amazon S3

You can access and use Amazon S3 through different methods, such as the AWS management console, the AWS command line interface (CLI), AWS software development kits (SDKs) and the Amazon S3 REST API. Each of these methods suits different needs.

- AWS management console: The console is a user-friendly graphical interface that allows you to manage your Amazon S3 resources. You can create buckets, upload and manage objects, set permissions and configure other settings without writing any code.

- AWS command line interface: For those who prefer script-based management, the AWS CLI is a powerful tool. You can perform virtually all the operations the console can handle via command line instructions. After installing the CLI, you can execute commands to manage S3 buckets and objects directly from your terminal or script.

- AWS SDKs: AWS has SDKs for programming languages like Python, Java and JavaScript. They let developers integrate S3 functionality directly into their apps. With an SDK, you can perform tasks like uploading files, fetching objects and managing permissions programmatically, which facilitates integration with other apps and automated workflows.

- Amazon S3 REST API: The Amazon S3 REST API provides programmatic access to Amazon S3 via HTTP. This method is ideal for developers who need to directly interact with S3 services within their applications or for integrating S3 with other RESTful services. It allows detailed control over S3 operations such as creating buckets, uploading objects and managing access permissions.
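As a rough illustration of what the REST API addresses, the sketch below builds the virtual-hosted-style HTTPS URL for an object. The function name and the example bucket, region and key are assumptions. Requests to private objects also need to be signed, which the CLI and SDKs handle for you.

```python
from urllib.parse import quote


def object_url(bucket: str, region: str, key: str) -> str:
    """Build a virtual-hosted-style URL for an object (illustrative helper)."""
    # quote() percent-encodes characters that are unsafe in a URL path
    # but leaves "/" alone, so key prefixes stay readable.
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"


print(object_url("example-bucket", "us-west-2", "reports/q1 summary.pdf"))
# https://example-bucket.s3.us-west-2.amazonaws.com/reports/q1%20summary.pdf
```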

How to Create S3 Buckets

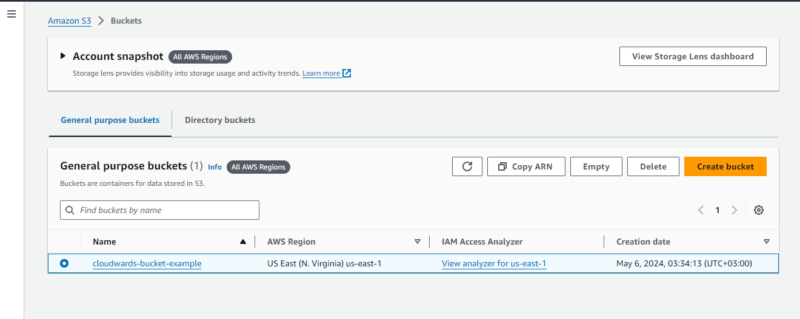

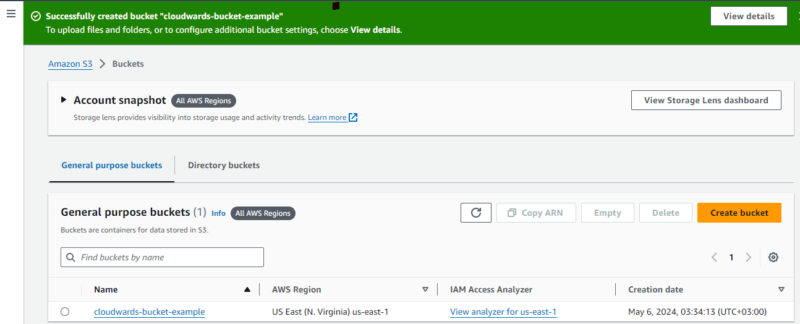

Creating an S3 bucket in Amazon Web Services involves a few simple steps. First, you log in to the AWS console and navigate to Amazon S3. From there, you initiate the bucket creation process, where you’ll specify a unique name for your bucket and select the AWS region where it will reside. This setup allows you to effectively store and manage data in your chosen location.

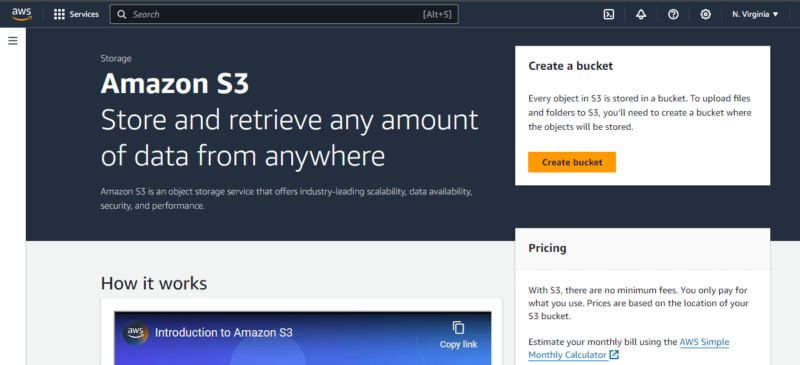

- Open the Management Console

Sign in to your AWS account, click on “services” at the top of the page and then select “storage” from the menu. From there, click on “S3” to manage your buckets and data. You can see a list of your existing buckets from the S3 dashboard. You can also create new buckets or manage objects within your current buckets.

- Start the “Create Bucket” Process

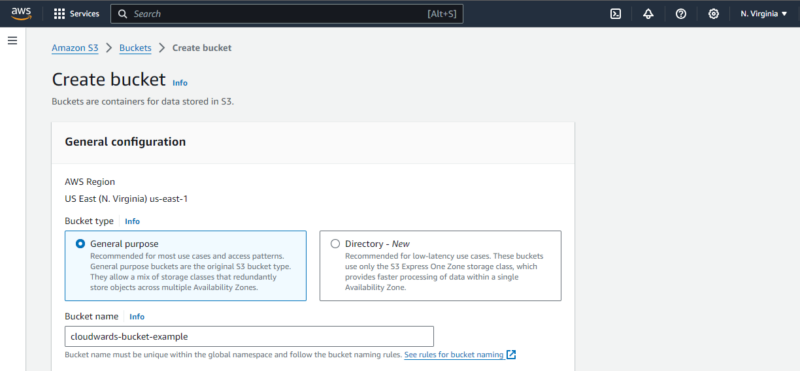

Click on “create bucket” to begin setting up your new bucket. A window will appear for you to enter the name and region for your bucket. After filling in those details, click “next” to continue configuring your bucket settings.

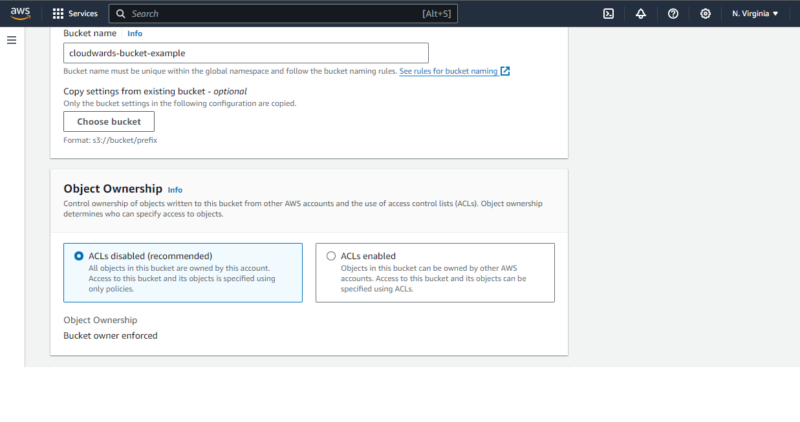

- Enter a Name for Your Bucket

Choose a name for your bucket. This name must be globally unique among all existing bucket names in Amazon S3. You can choose between two S3 bucket types: “general purpose,” which is good for many different uses and keeps data safe by storing it in multiple locations, and “directory – new,” which is designed for fast access by keeping data in just one location.
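Beyond being globally unique, bucket names have to follow a set of formatting rules. The sketch below checks only a subset of them (3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit; no consecutive dots; not shaped like an IP address). It is a partial, illustrative check with a hypothetical function name, and it cannot tell you whether a name is already taken.

```python
import re

# 3-63 characters, lowercase letters/digits/dots/hyphens,
# beginning and ending with a letter or digit.
_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IP_RE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def looks_like_valid_bucket_name(name: str) -> bool:
    """Check a subset of the bucket naming rules (not exhaustive)."""
    if not _NAME_RE.fullmatch(name):
        return False
    if ".." in name:           # adjacent periods are not allowed
        return False
    if _IP_RE.fullmatch(name): # names must not look like an IP address
        return False
    return True


for candidate in ["my-bucket-2024", "My_Bucket", "ab", "192.168.5.4"]:
    print(candidate, looks_like_valid_bucket_name(candidate))
```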

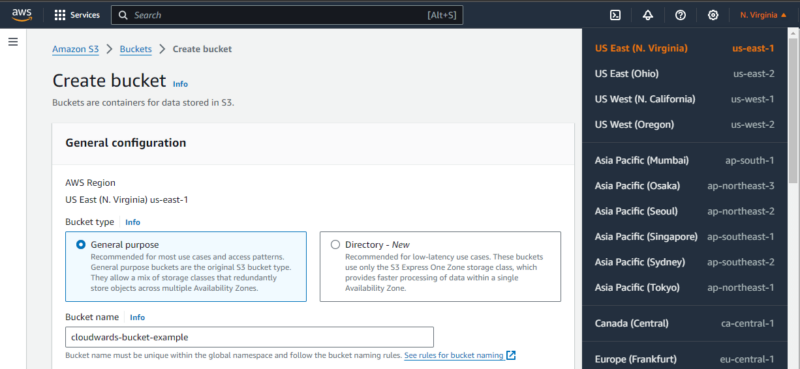

- Select Your Region

Choose an AWS region for your bucket. This should be close to where you or your users are located to speed up data access. Use the region selector in the top right corner to manage S3 buckets in different geographic areas. Selecting the right region helps reduce latency and improve performance. Also consider data compliance requirements in certain regions.

- Set Permissions

In the “object ownership” section of the S3 bucket settings, you can enable access control lists (ACLs) to grant specific permissions to users, including the bucket owner, other AWS accounts and public users. Alternatively, you can disable ACLs in favor of bucket policies for finer control over permissions. Adjust the settings to ensure only authorized users can access or modify data.

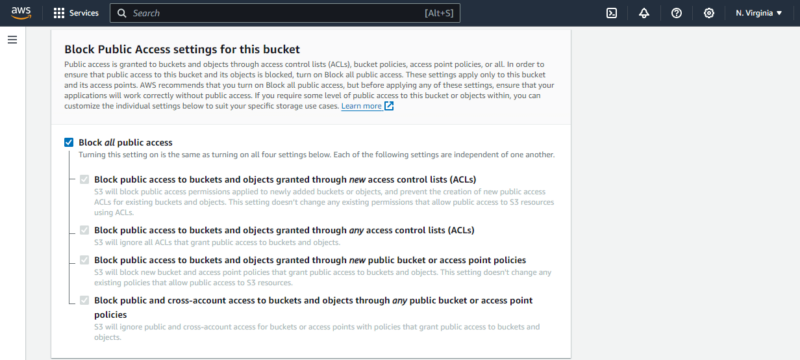

- Block Public Access (Optional)

Leaving “block all public access” enabled secures your data by preventing public access to the bucket and its objects. This setting is highly recommended to prevent unauthorized access to sensitive data; turn it off only if a specific use case, such as hosting a public website, requires it. Carefully review these settings to balance security and accessibility based on your needs.
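The same setting can be applied from code. The sketch below builds the four-flag configuration that corresponds to blocking all public access and, in a separate helper, passes it to boto3's `put_public_access_block`. It assumes boto3 is installed and credentials are configured; the helper names and the bucket name you pass in are illustrative.

```python
def block_all_public_access() -> dict:
    """Return the configuration equivalent to 'block all public access'."""
    return {
        "BlockPublicAcls": True,        # reject new public ACLs
        "IgnorePublicAcls": True,       # ignore any existing public ACLs
        "BlockPublicPolicy": True,      # reject public bucket policies
        "RestrictPublicBuckets": True,  # limit access under public policies
    }


def apply_block(bucket: str) -> None:
    """Apply the configuration to a bucket (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without it

    boto3.client("s3").put_public_access_block(
        Bucket=bucket,
        PublicAccessBlockConfiguration=block_all_public_access(),
    )


print(block_all_public_access())
```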

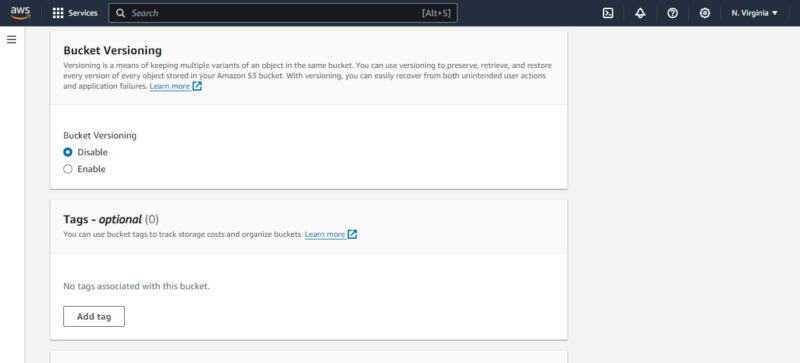

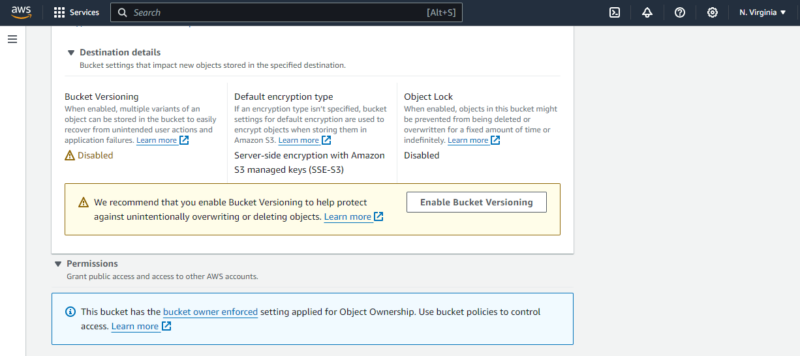

- Configure Other Options

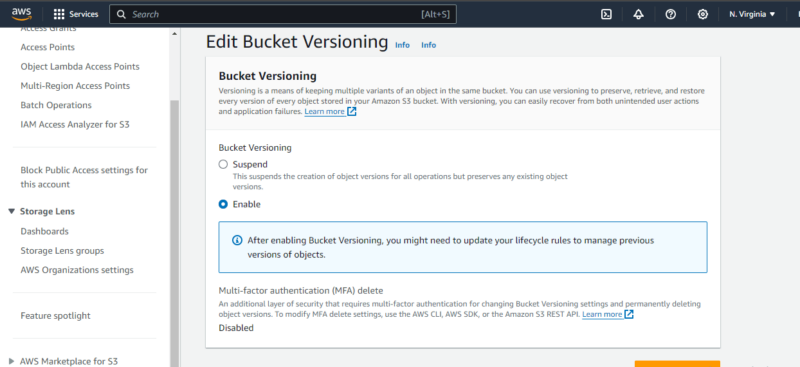

Other optional settings include bucket versioning and tags. You can choose to disable or enable bucket versioning or add tags with the “add tag” option. Versioning helps keep multiple versions of an object in the same bucket, which is useful for data recovery. Tags can help you organize and manage your buckets by assigning them key-value pairs.

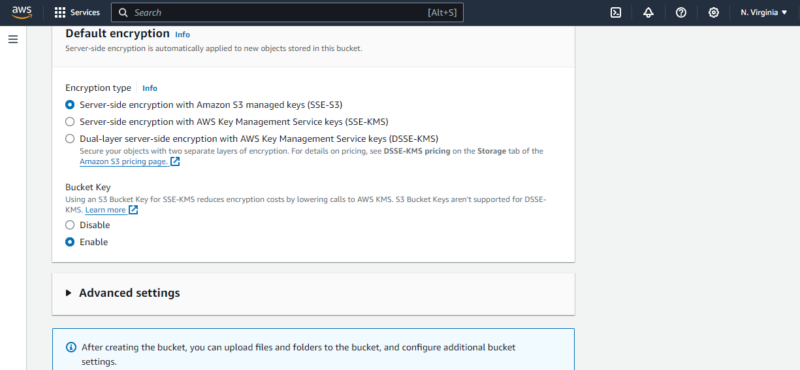

- Configure Encryption

Encryption options include using Amazon S3-managed keys or enabling AWS Key Management Service (KMS) to manage your keys. You can also lower encryption costs by enabling S3 Bucket Keys, and if needed, activate S3 Object Lock for added security.

- Review and Create

Check your settings, and if everything is correct, click “create bucket” to finalize the creation. Make sure all configurations meet your requirements before proceeding. S3 will create the bucket in just a few seconds. Once the bucket is created, you can start uploading and managing your data.

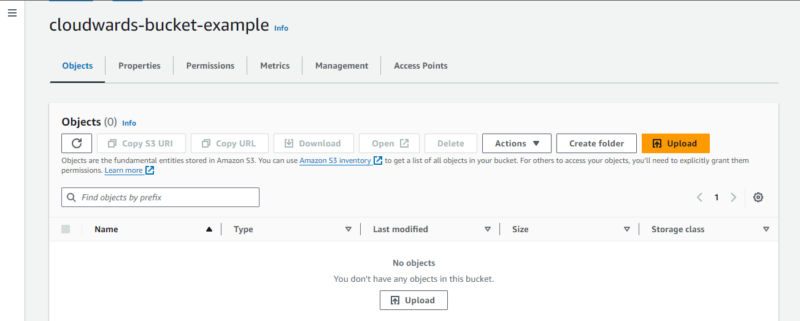

How to Upload Objects to the S3 Bucket

Uploading files to an Amazon S3 bucket is a straightforward process that involves selecting files on your computer and transferring them to your chosen bucket via the console. This method allows you to store any type of file securely in the cloud, making it accessible from anywhere. Follow these steps to quickly and easily upload your files.

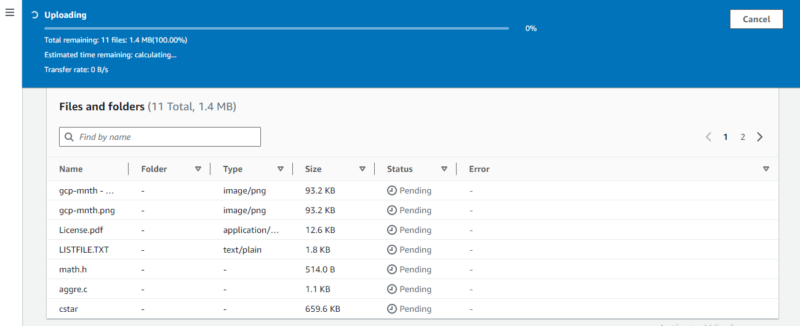

- Upload Files

Click on “upload” to start adding files. You can drag and drop files into the upload area or use the file selector to choose files on your computer. You can upload files of any type or even a whole folder.

- Configure File Options

Before finalizing the upload, you can enable bucket versioning, set access permissions and configure the encryption type and object lock. These settings help manage your files and keep them secure.

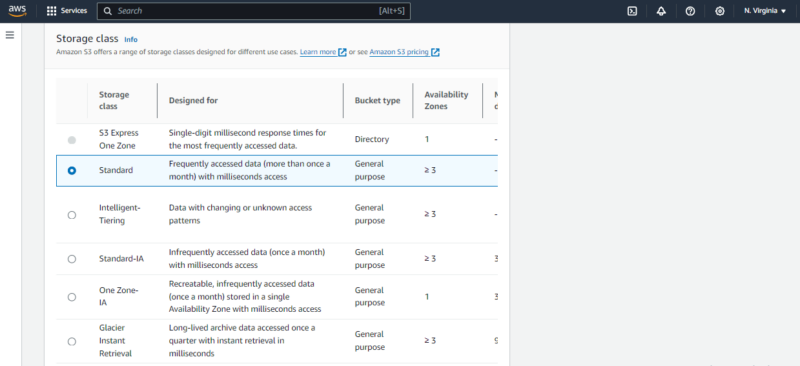

- Choose a Storage Class

Choose “standard” for data you access often or “glacier” for files you need to store but access infrequently.

- Start the Upload

After setting your file options, click “upload” to begin transferring your files to the S3 bucket. You can watch the upload progress in the console. When it finishes, your files will be stored in the bucket.
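The upload steps above can also be scripted. This is a minimal sketch, assuming boto3 is installed and credentials are configured: a small helper picks a storage class based on how often the file will be read and passes it to `upload_file` through `ExtraArgs`. The helper names and the simple often/rarely rule are assumptions for illustration.

```python
def upload_args(accessed_often: bool) -> dict:
    """Choose upload options: STANDARD for hot data, GLACIER for archives."""
    storage_class = "STANDARD" if accessed_often else "GLACIER"
    return {
        "StorageClass": storage_class,
        "ServerSideEncryption": "AES256",  # encrypt with S3-managed keys
    }


def upload(path: str, bucket: str, key: str, accessed_often: bool = True) -> None:
    """Upload one local file (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so upload_args() works without it

    boto3.client("s3").upload_file(
        path, bucket, key, ExtraArgs=upload_args(accessed_often)
    )


print(upload_args(accessed_often=False))
```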

How to Move Data to Amazon S3

Moving data to Amazon S3 typically involves migrating data from existing systems, databases or other cloud computing services rather than just uploading from a local device. This process uses tools designed to handle larger datasets or more complex scenarios where data may be coming from various sources.

One common method for moving data to S3 is to use the AWS S3 command line interface (CLI). The CLI allows you to efficiently transfer files directly from your local system or other servers. Below, we go over the steps to follow when moving data to Amazon S3 using S3 CLI. However, there are some prerequisites to know about before we get started.

Prerequisites

- AWS account: You need an active AWS account to use the AWS CLI. If you don’t have one, create a free account at aws.amazon.com.

- AWS CLI installation: Download and install the AWS CLI on your local machine from the official AWS website. Follow the installation instructions for your operating system. In this example, we will be using Windows OS.

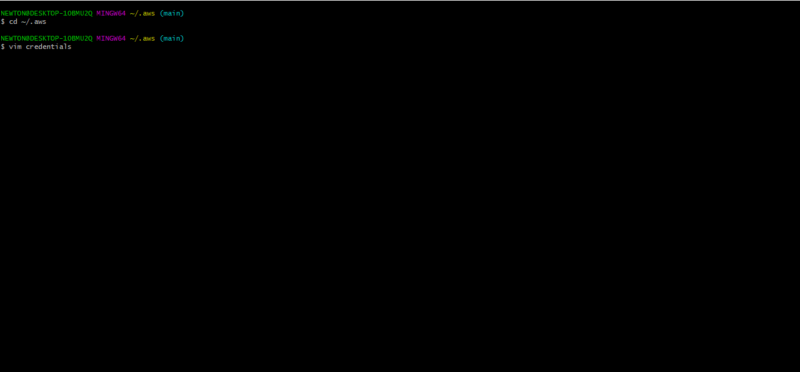

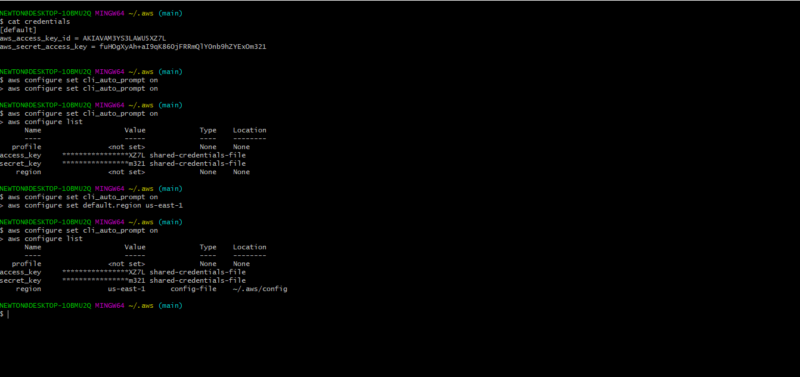

- Create Your AWS CLI Credentials

Set up your AWS CLI configuration by creating a file named “~/.aws/credentials” with your AWS access key ID and secret access key. To do so, open a terminal window or command prompt on your local machine. Use the command “cd ~/.aws” to navigate to the directory where the AWS CLI configuration files are stored. Use a text editor like nano, vim or notepad to create the file.

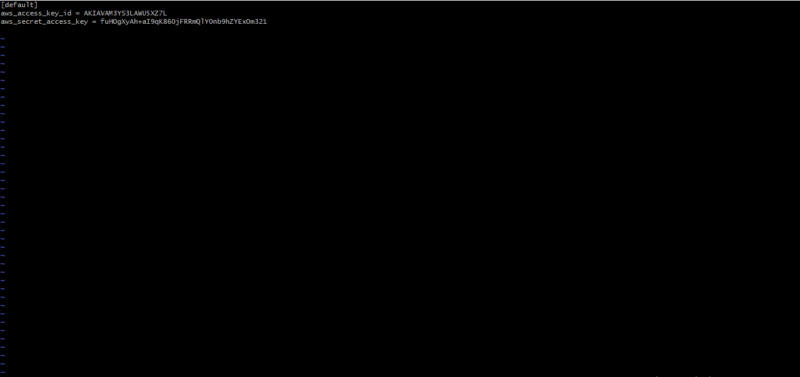

- Add Your AWS Access Key ID and Secret Access Key

In the text editor, add the following lines with your AWS credentials, then save the file:

    [default]
    aws_access_key_id = YOUR_ACCESS_KEY_ID
    aws_secret_access_key = YOUR_SECRET_ACCESS_KEY

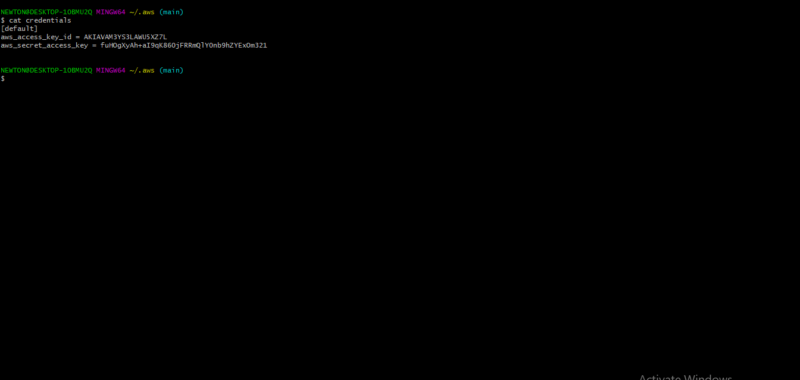

- Verify the Credentials

You can verify that the credentials file has been created and contains the correct information by opening it again in the text editor.
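If you would rather script this step, the credentials file is a plain INI file that Python's standard `configparser` can write and read back. The sketch below writes to a temporary directory instead of your real “~/.aws” folder so it is safe to run; the function names and placeholder values are illustrative.

```python
import configparser
import tempfile
from pathlib import Path


def write_credentials(path: Path, access_key_id: str, secret_access_key: str) -> None:
    """Write a [default] profile to an AWS-style credentials file."""
    config = configparser.ConfigParser()
    config["default"] = {
        "aws_access_key_id": access_key_id,
        "aws_secret_access_key": secret_access_key,
    }
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w") as handle:
        config.write(handle)


def read_profile(path: Path, profile: str = "default") -> dict:
    """Read a profile back so its contents can be checked."""
    config = configparser.ConfigParser()
    config.read(path)
    return dict(config[profile])


# Demo in a throwaway directory; point `path` at ~/.aws/credentials for real use.
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / ".aws" / "credentials"
    write_credentials(path, "YOUR_ACCESS_KEY_ID", "YOUR_SECRET_ACCESS_KEY")
    print(path.read_text())
    print(sorted(read_profile(path)))
```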

- Set the Default Region:

Set the default region for your AWS CLI commands by running the following command, followed by your region code:

“aws configure set default.region”

To verify that your AWS CLI is configured correctly, you can run the following command in your terminal:

“aws configure list”

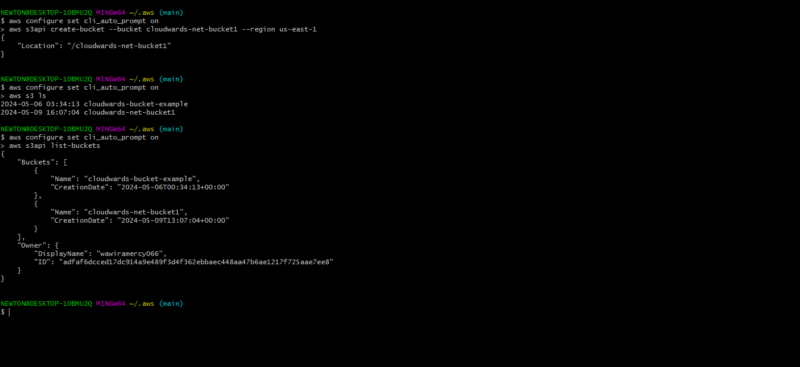

- Create a Bucket:

Use the following AWS CLI command to create a new S3 bucket, supplying your bucket name after “--bucket” and your region code after “--region”:

“aws s3api create-bucket --bucket --region”

To verify that the bucket has been created using the AWS CLI, you can use the “aws s3api list-buckets” and “aws s3 ls” commands.

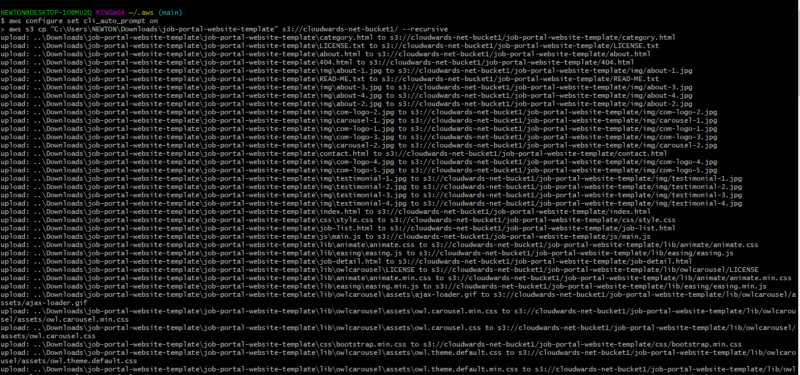
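For comparison, here is a hedged sketch of the same bucket creation through boto3. One detail worth knowing is that every region except us-east-1 needs an explicit location constraint, so the helper below adds it only when required. The function names are assumptions, and the second helper needs boto3 and configured credentials.

```python
def create_bucket_kwargs(bucket: str, region: str) -> dict:
    """Build the arguments for create_bucket for a given region."""
    kwargs = {"Bucket": bucket}
    # us-east-1 is the default location and must not be passed as a constraint.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_bucket(bucket: str, region: str) -> None:
    """Create the bucket (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without it

    client = boto3.client("s3", region_name=region)
    client.create_bucket(**create_bucket_kwargs(bucket, region))


print(create_bucket_kwargs("example-bucket", "eu-west-1"))
print(create_bucket_kwargs("example-bucket", "us-east-1"))
```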

- Move Data to S3

Now that your bucket is set up, it’s time to move your data. Use the “aws s3 cp” command to copy files from your local machine to the S3 bucket. To copy multiple files or all files within a directory, add the “--recursive” option to the command.
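To see what a recursive copy does conceptually, the sketch below walks a local directory and works out the object key each file would receive: the destination prefix plus the file's path relative to the source folder. It only plans the copy and uploads nothing; the function name and the “backup/” prefix are illustrative assumptions.

```python
import tempfile
from pathlib import Path


def plan_recursive_copy(local_dir: Path, prefix: str = "") -> dict:
    """Map every file under local_dir to the S3 key it would be copied to."""
    plan = {}
    for path in sorted(local_dir.rglob("*")):
        if path.is_file():
            # Keys always use "/" separators, whatever the local OS uses.
            relative = path.relative_to(local_dir).as_posix()
            plan[path] = f"{prefix}{relative}"
    return plan


# Demo with a throwaway directory containing one nested file.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "sub").mkdir()
    (root / "a.txt").write_text("hello")
    (root / "sub" / "b.txt").write_text("world")
    for key in plan_recursive_copy(root, prefix="backup/").values():
        print(key)
```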

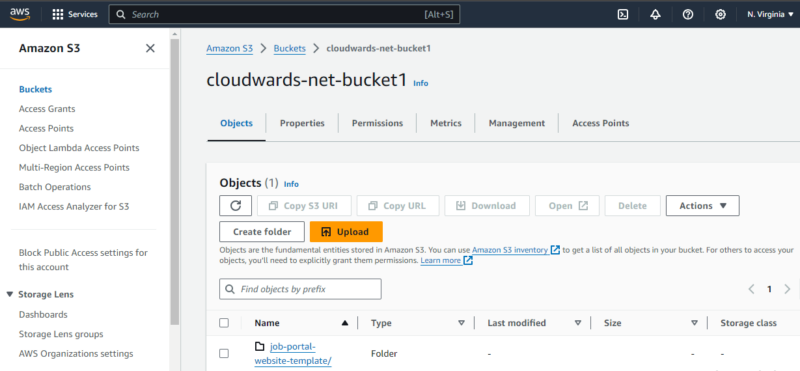

- Verify Data Migration in the Console

To verify your data has been moved to Amazon S3, go to the console and select your bucket, or use the “aws s3 ls” command to list the bucket’s contents. Check the list of objects to ensure your files are there. You can also review the timestamps to confirm the data was recently uploaded.

How to Protect and Secure Data in AWS S3

To secure data in Amazon S3, you need to implement access controls, encryption and monitoring strategies to prevent unauthorized access. Use AWS tools such as bucket policies, user permissions, encryption services and logging mechanisms to enhance security.

The importance of these security measures is highlighted by events such as the 2019 incident in which Capital One’s poorly configured S3 bucket exposed private information from 100 million credit card applications, leading to an $80 million fine.

We will now discuss the methods and steps required to effectively protect and secure your data in Amazon S3.

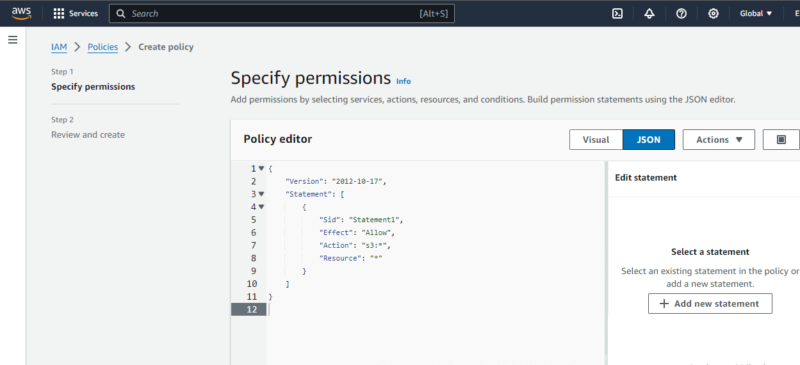

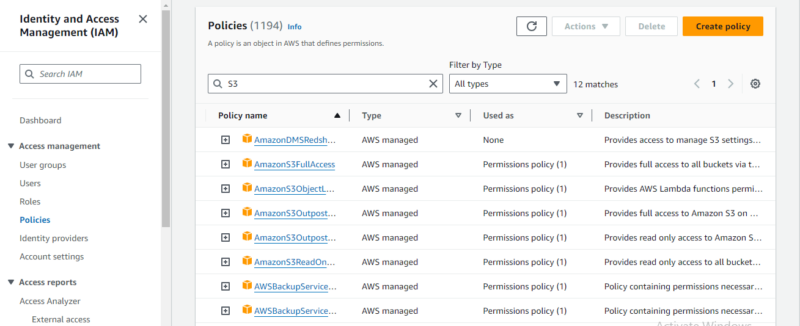

- Create IAM Policies

To control access to your S3 resources, start by creating IAM policies in the AWS console. Go to the IAM service, click “policies” and select “create policy.” Use the visual or JSON editor to define the necessary permissions; then, create and assign the policy to users or groups.
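If you choose the JSON editor, a policy is just a structured document. The sketch below generates one that allows listing a single bucket and reading and writing its objects. The function name and the bucket name are assumptions, so adjust the actions to the access you actually need.

```python
import json


def read_write_policy(bucket: str) -> dict:
    """Build an IAM policy allowing list, read and write on one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                # Listing applies to the bucket itself.
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Sid": "ReadWriteObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                # Object actions apply to the keys inside the bucket.
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


print(json.dumps(read_write_policy("example-bucket"), indent=2))
```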

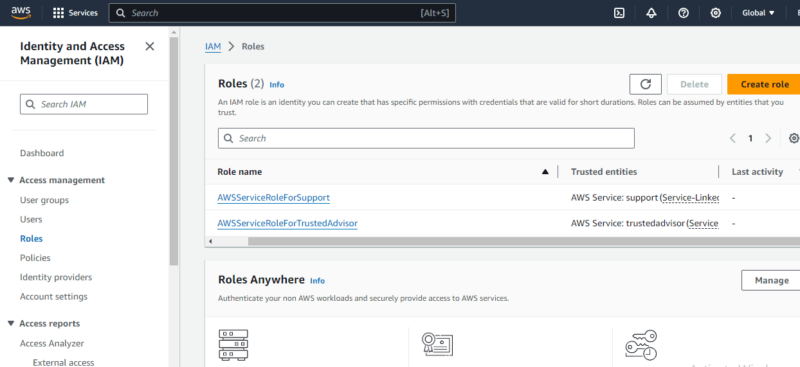

- Create IAM Roles

Create IAM roles for applications that need S3 access. In the IAM console, click “roles” and then “create role.” Choose the AWS service that will use the role, such as Amazon EC2; attach the policies that grant the S3 access it needs; and create the role. Attach this role to the service so that it receives temporary credentials for accessing your bucket.
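Behind the console wizard, a role carries a trust policy that names who may assume it. As a minimal sketch, the helper below builds a trust policy for an AWS service. The function name is hypothetical, and the EC2 service principal is used only as an example default.

```python
import json


def trust_policy(service: str = "ec2.amazonaws.com") -> dict:
    """Build a trust policy letting an AWS service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service},
                "Action": "sts:AssumeRole",
            }
        ],
    }


print(json.dumps(trust_policy(), indent=2))
```

The S3 permissions themselves come from the policies you attach to the role, not from the trust policy.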

- Create Bucket Policies

Go to the “permissions” tab in your S3 bucket settings to create bucket policies. Select your bucket in the S3 console and click “permissions” and then “bucket policy.” Write a JSON policy to define who can access the bucket and the actions they can perform. Save the policy to apply the changes.
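As one example of what such a JSON policy can look like, the sketch below generates a bucket policy that denies any request not sent over HTTPS. The function name and bucket name are assumptions; the pattern itself is a commonly used one, but treat it as a starting point rather than a complete policy.

```python
import json


def require_tls_policy(bucket: str) -> dict:
    """Build a bucket policy that rejects requests made without TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket and every object inside it.
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


print(json.dumps(require_tls_policy("example-bucket"), indent=2))
```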

- Enable Encryption for Data at Rest

To ensure your S3 bucket files are encrypted, start by enabling server-side encryption in the AWS console. Open your bucket, go to the “properties” tab and under “default encryption,” select the type of encryption you want. Choose “SSE-S3” for standard encryption with Amazon-managed keys or “SSE-KMS” for enhanced security through AWS Key Management Service. You can also provide your own encryption key with “SSE-C,” which is supplied with individual requests rather than set as a bucket default.
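The default-encryption choice maps to a small configuration document. The sketch below builds it for either S3-managed keys or a KMS key, with Bucket Keys switched on in the KMS case, and shows how it could be passed to boto3's `put_bucket_encryption`. The helper names and the key ID argument are illustrative, and the second helper assumes boto3 and credentials are available.

```python
from typing import Optional


def default_encryption(kms_key_id: Optional[str] = None) -> dict:
    """Build a default-encryption config: SSE-S3, or SSE-KMS if a key is given."""
    if kms_key_id is None:
        rule = {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
    else:
        rule = {
            "ApplyServerSideEncryptionByDefault": {
                "SSEAlgorithm": "aws:kms",
                "KMSMasterKeyID": kms_key_id,
            },
            "BucketKeyEnabled": True,  # reduces the number of KMS requests
        }
    return {"Rules": [rule]}


def apply_encryption(bucket: str, kms_key_id: Optional[str] = None) -> None:
    """Apply the config to a bucket (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without it

    boto3.client("s3").put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=default_encryption(kms_key_id),
    )


print(default_encryption())
```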

- Enable Versioning

Select your bucket in the S3 console, go to the “properties” tab and turn on the “versioning” setting to keep multiple versions of each object.
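In code, the versioning switch is a one-field setting. This minimal sketch builds it and, in a separate helper that assumes boto3 and credentials, applies it with `put_bucket_versioning`. The helper names are assumptions. Note that a bucket that has had versioning enabled can later be suspended but not returned to an unversioned state.

```python
def versioning_config(enabled: bool) -> dict:
    """Build the versioning setting: 'Enabled' or 'Suspended'."""
    return {"Status": "Enabled" if enabled else "Suspended"}


def set_versioning(bucket: str, enabled: bool = True) -> None:
    """Apply the setting to a bucket (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without it

    boto3.client("s3").put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration=versioning_config(enabled),
    )


print(versioning_config(True))  # {'Status': 'Enabled'}
```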

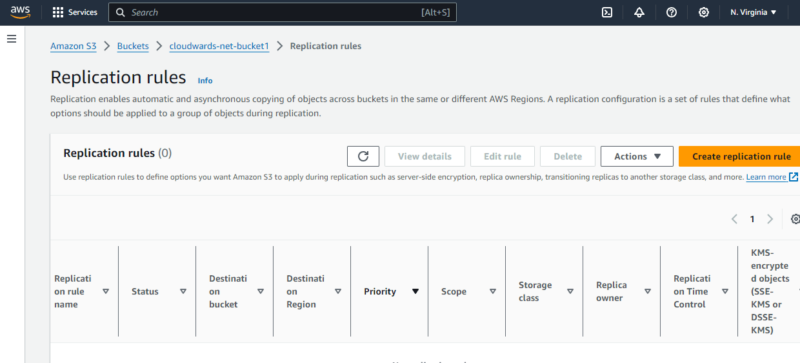

- Set up S3 Replication

For additional protection, set up S3 replication by navigating to the “management” tab in your bucket settings. Here, you can create a replication rule to specify the source and destination buckets and decide whether to replicate all objects or only certain ones.
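A replication rule can also be described as a configuration document. The sketch below builds a single rule that replicates objects under an optional key prefix to a destination bucket, in the shape boto3's `put_bucket_replication` expects. The function name, rule ID, role ARN and bucket names are all placeholders, and replication additionally requires versioning to be enabled on both buckets.

```python
def replication_config(role_arn: str, destination_bucket: str, prefix: str = "") -> dict:
    """Build a one-rule replication config (an empty prefix matches all objects)."""
    return {
        "Role": role_arn,  # IAM role S3 assumes to copy objects
        "Rules": [
            {
                "ID": "replication-rule-1",
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {"Bucket": f"arn:aws:s3:::{destination_bucket}"},
            }
        ],
    }


def apply_replication(source_bucket: str, config: dict) -> None:
    """Attach the config to the source bucket (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without it

    boto3.client("s3").put_bucket_replication(
        Bucket=source_bucket, ReplicationConfiguration=config
    )


example = replication_config(
    "arn:aws:iam::123456789012:role/example-replication-role",  # placeholder ARN
    "example-destination-bucket",
    prefix="logs/",
)
print(example["Rules"][0]["Destination"])
```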

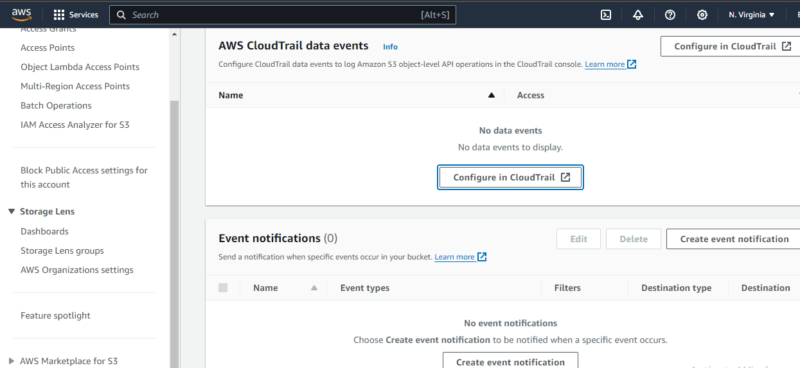

- Monitor and Log Access Requests

Set up CloudTrail in the AWS management console to record who accesses your S3 data and all related activity. For more specific logging, go to the “properties” tab in your S3 bucket, turn on server access logging and choose where to save the logs.

Amazon S3 Main Use Cases

The main use cases of Amazon S3 include data backup and archiving, content distribution and hosting, disaster recovery, big data analytics, and software and object storage. Amazon S3 is also utilized for IoT devices, enterprise application storage and providing the underlying storage layer for data lakes in AWS.

- Data backup and archiving: People use Amazon S3 to securely store their data backups and archival files. This helps keep important information safe and easily retrievable.

- Content distribution and hosting: Many websites and services use S3 to host and distribute content such as videos, images and application data, ensuring fast access for users worldwide.

- Disaster recovery: S3 provides a reliable solution for disaster recovery plans by storing copies of critical data in multiple locations. This redundancy helps businesses quickly recover from system failures.

- Big data analytics: S3 supports big data analytics by offering a robust and scalable storage solution for analyzing large datasets, helping organizations gain insights from their data.

- Software and object storage: Developers can store application-related data and objects in S3 and benefit from its durability and scalability.

- IoT devices: For IoT applications, S3 stores and manages data generated by numerous devices, facilitating efficient data processing and analysis.

- Enterprise application storage: Enterprises rely on S3 to store application data, benefiting from its high availability and security features.

- Data lakes: S3 provides the foundational storage layer for data lakes in AWS, allowing users to collect, store and analyze vast amounts of data from various sources.

Amazon S3 Pricing Plans

Amazon S3 pricing is based on a pay-as-you-go model, so you only pay for the storage you use. The cost per gigabyte varies based on factors like the storage class, region and volume of data stored. This pricing structure helps efficiently manage expenses. It’s important to monitor your usage to avoid unexpected costs.

The default S3 Standard storage class costs $0.023 per GB for the first 50 TB per month, $0.022 per GB for the next 450 TB per month and $0.021 per GB for anything over 500 TB per month.
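To show how the tiers combine, here is a small worked calculation using the three rates quoted above. It covers storage only (no requests or data transfer) and assumes 1 TB = 1,024 GB. The function name is illustrative, and the rates vary by region and change over time, so check current pricing before relying on the numbers.

```python
# (tier size in GB, price per GB-month) using the rates quoted in this article
TIERS = [
    (50 * 1024, 0.023),     # first 50 TB
    (450 * 1024, 0.022),    # next 450 TB
    (float("inf"), 0.021),  # everything over 500 TB
]


def monthly_storage_cost(gigabytes: float) -> float:
    """Estimate the monthly S3 Standard storage cost for a given volume."""
    cost = 0.0
    remaining = gigabytes
    for tier_size, rate in TIERS:
        billed = min(remaining, tier_size)  # portion that falls in this tier
        cost += billed * rate
        remaining -= billed
        if remaining <= 0:
            break
    return cost


print(round(monthly_storage_cost(1000), 2))       # 23.0
print(round(monthly_storage_cost(60 * 1024), 2))  # 1402.88
```

In the second example, the first 50 TB are billed at $0.023 per GB and the remaining 10 TB at $0.022 per GB.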

Other storage classes like S3 Standard-Infrequent Access and S3 Glacier have lower costs per gigabyte but charge for data retrieval, while S3 Intelligent-Tiering moves objects between access tiers automatically in exchange for a small monitoring fee. The location of your S3 buckets can also impact pricing. Pricing may differ across regions, so choose the most cost-effective region based on your data access patterns.

Finally, the volume of stored data plays a role, as the cost per gigabyte typically decreases as the amount of total data stored increases. If you’re curious about the specific costs associated with using Amazon S3, you can use the AWS pricing calculator to evaluate your use case.

Is AWS PCI DSS-Compliant?

Amazon Web Services (AWS), including Amazon S3, is certified as a Level 1 service provider under the Payment Card Industry Data Security Standard (PCI DSS). This means that AWS follows strict security rules to keep credit card information safe. AWS is assessed regularly to make sure it stays compliant, providing a secure foundation for handling credit card data.

What Are the Main Differences Between Amazon S3, EBS and EFS?

Amazon S3, EBS (Elastic Block Store) and EFS (Elastic File System) each provide different types of storage on AWS. Each type of storage is best for different uses based on how they handle data.

S3 is good for storing lots of data that you need to be able to access from anywhere, making it great for backing up data or hosting websites. EBS is used for storage that is directly attached to servers running on AWS, so it is ideal for databases or applications that need quick access to data.

Finally, EFS is for storage that multiple servers can use at the same time, which is useful for cases in which different servers need to share files easily, such as for websites that handle a lot of user data.

Amazon S3 Cloud Storage Alternatives

Alternatives to Amazon S3 include Google Cloud Storage, Microsoft Azure Blob Storage, DigitalOcean Spaces and IBM Cloud Object Storage.

Final Thoughts

We hope this guide has helped you understand Amazon S3 and what it offers for cloud storage. We’ve looked at its main features, how it keeps data safe and how it compares to other services like Google Cloud Storage and Microsoft Azure Blob. Amazon S3 is great for keeping data secure, backing up large amounts of information or running business applications on the cloud.

Do you use Amazon S3 for your storage needs, or do you prefer another service? What do you think about Amazon S3’s security features? Would you use multiple cloud storage services to better manage your data? Let us know in the comments below, and thank you for reading!

FAQ: Amazon S3

- What is Amazon S3 used for?

Amazon S3 is a highly scalable object storage service primarily used for data backup, content delivery, and storing and retrieving large amounts of data.

- Is Amazon S3 the same as Google Drive?

No, Amazon S3 is not the same as Google Drive. S3 is an enterprise-grade object storage service for large-scale data storage, while Google Drive is more consumer-oriented, designed for storing individual files and folders that multiple users can share and access. The Google equivalent to Amazon S3 is called Google Cloud Storage.

- What is Amazon S3?

Amazon S3, or Amazon Simple Storage Service, is a cloud storage service provided by Amazon Web Services (AWS) that offers object storage through a web service interface.

- What is the difference between AWS and S3?

AWS (Amazon Web Services) is a comprehensive cloud computing platform offering a wide range of services, including S3 (Simple Storage Service), which is a specific service within AWS focused on object storage.