Security Risks and Challenges with AI Copilots

October 16, 2024

By Sekhar Sarukkai – Cybersecurity@UC Berkeley

In the previous blog, we explored the security challenges tied to Foundational AI, the critical first layer that supports all AI models. We examined risks like prompt engineering attacks, data leakage, and misconfigured environments. As AI technology advances, it is crucial to secure each layer to prevent emerging vulnerabilities.

In this blog, we’ll dive into the security risks associated with Layer 2: AI Copilots. These AI-driven virtual assistants are rapidly being deployed across industries to automate certain tasks, support decision-making, and enhance productivity. While AI Copilots offer powerful benefits, they also introduce new security challenges that organizations must address to ensure safe and secure use.

Security Risks at the AI Copilot Layer

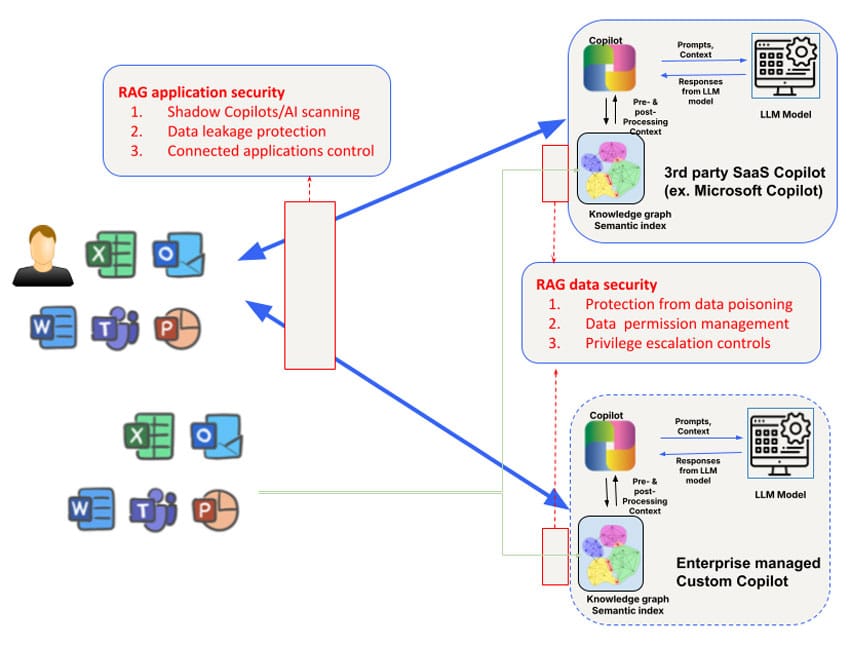

Security risks differ for third-party AI Copilots used by employees (such as Microsoft Copilot) and custom AI Copilots developed by business teams that are customer- or partner-facing. For example, access to and manipulation of the knowledge graph of third-party AI Copilots is limited and so is the control of what data is used to train the model itself. Nevertheless, security concerns addressed at Layer 1 need to be augmented with a focus on three areas: data, context, and permissions to address broader Copilot security needs.

1. Data Poisoning and Model Manipulation

AI Copilots rely on large volumes of data to generate responses and assist users with complex tasks. However, this heavy dependency on data introduces the risk of data poisoning, where attackers manipulate input data to distort the model’s outputs. This can result in AI Copilots providing incorrect suggestions, making flawed decisions, or even executing harmful actions based on the poisoned data.

A bad actor could alter training data used to fine-tune the models powering AI Copilots, causing the system to behave unpredictably or favor certain outcomes. For example, earlier this year, researchers sampled Hugging Face and discovered more than 100 models were malicious. In environments where AI Copilots are used for critical tasks—like financial analysis or customer support—this can have far-reaching consequences. This vulnerability could potentially expose an organization to external threats, leading to both financial and reputational damage.

Mitigation Tip: To reduce the risk of data poisoning, organizations should implement strict data validation mechanisms and monitor the integrity of all data used to train or update AI models. Regularly scheduled audits and employing data lineage tools to track the flow of data into AI Copilot systems can help identify potential vulnerabilities.

2. Contextual Risks and Supply Chain Attacks

AI Copilots often leverage contextual data, such as user behavior, specific company information, or real-time data feeds, to tailor their responses. However, this reliance on context introduces the risk of context poisoning, where attackers manipulate the contextual data to alter the system’s behavior.

For example, Microsoft’s Copilot system creates rich semantic indices for enterprise data—including emails, documents, and chats—to provide accurate and relevant responses. However, this context can be exploited for targeted attacks. By injecting malicious data or altering the underlying context, attackers could cause the AI Copilot to generate misleading outputs, inadvertently share sensitive information, conduct spear phishing attacks, or take other harmful actions. Another form of data supply chain poisoning in the Microsoft Copilot context can be carried out by simply sharing a malicious file with the target user. This attack will work even if the user does not accept the share and is not even aware of this shared malicious file.

Mitigation Tip: Organizations are advised to establish clear rules for how contextual data is used and accessed by AI Copilots. This includes limiting the types of data that can be included in AI Copilot training, enforcing access controls, and regularly auditing AI outputs for signs of manipulation. Red teaming (simulated attacks) can also help identify weaknesses in AI Copilot systems and their contextual data processing.

3. Misuse of Permissions and Escalation

A major risk associated with AI Copilots is misuse of permissions. These systems often require access to multiple data sets, applications, or even company infrastructure to perform tasks efficiently. If not properly managed, AI Copilots could be granted excessive permissions, allowing them to access sensitive data or perform actions beyond their intended scope.

This poses a risk of escalation attacks, where attackers exploit the AI Copilot’s permissions to gain access to unauthorized systems or confidential information. In some cases, misconfigured permissions may allow AI Copilots to perform actions like changing account settings, deleting important files, or exposing sensitive data to third-party applications.

For instance, GitHub’s Copilot has been identified as a risk due to its integration with third-party plugins that could lead to data leaks if those plugins are not properly vetted. A study conducted by Cornell University revealed that approximately 40% of programs generated using GitHub’s Copilot contained vulnerabilities, emphasizing the real-world risks of granting AI systems excessive permissions without strict oversight.

Mitigation Tip: Best practices for managing AI Copilot permissions include applying the principle of least privilege (granting only the minimum permissions necessary for AI Copilots to perform their tasks) and regularly reviewing access logs. Automated tools that detect and flag anomalous behavior should also be employed to mitigate risks from unauthorized access or privilege escalation.

4. Rogue AI Copilots and Shadow AI

As AI Copilots proliferate, organizations may face the threat of rogue AI Copilots or shadow AI (unauthorized AI applications deployed without oversight). These shadow systems can operate outside of the organization’s formal security protocols, leading to significant risks, including data breaches, compliance violations, and operational failures.

Rogue AI Copilots can be created by well-meaning employees using open-source AI models or by malicious insiders seeking to bypass company controls. These unsanctioned systems often go unnoticed until a security breach or compliance audit reveals their existence.

The rise of shadow AI has been likened to the early days of shadow IT, when unsanctioned cloud services and applications were commonly used by employees without corporate approval. Similarly, shadow AI introduces the risk of unknown vulnerabilities lurking within an organization’s infrastructure, with no formal security measures to protect against misuse.

Mitigation Tip: To combat the rise of rogue AI Copilots, organizations need to maintain strict oversight of all AI deployments. Implementing AI governance frameworks can help ensure that only authorized AI Copilots are deployed, while continuous monitoring and automated discovery tools can detect unauthorized AI applications running within the organization.

Securing AI Copilots: Best Practices

The above risks can be addressed based on the use cases. For use of external third-party AI Copilots (such as Microsoft Copilot or Github Copilot), the first requirement is to get an understanding of shadow AI Copilot/AI usage via discovery. Skyhigh’s extended registry of AI risk attributes can be a handy way to discover third-party AI Copilots used by enterprises. Data protection at this layer is best addressed with a combination of network-based and API-based data security. Inline controls can be either applied via Secure Service Edge (SSE) forward proxies (for example, for file upload to AI Copilots) and application/LLM reverse proxies for home-grown AI Copilots/chatbots, or browser-based controls for application interactions that use WebSockets.

Another risk centers around other applications that can connect to AI Copilots. For example, Microsoft Copilots can be configured to connect to Salesforce bidirectionally. The ability to query Microsoft Copilot configurations to gain visibility into such connected applications will be essential to block this vector of leakage.

In addition to the AI Copilot application-level controls, special consideration needs to also be given to the retrieval augmented generation (RAG) knowledge graph and indices that are used by AI Copilots. Third-party AI Copilots are opaque in this regard. Hence organizations will be dependent on API support by AI Copilots to introspect and set policies of the vector/graph stores used. In particular, a challenging problem to address at this layer centers around permission management at the data level as well as privilege escalations that may inadvertently arise for AI Copilot-created content. Many AI Copilots do not yet have robust API support for inline/real-time control further compounding the challenge. Finally, APIs can be used for near real-time protection and on-demand scanning of the knowledge base for data controls as well as scanning for data poisoning attacks.

To protect against the security risks associated with AI Copilots, organizations should adopt a multi-layered approach to security. Key measures include:

- Conduct regular audits: Regularly review AI Copilot deployments for data poisoning vulnerabilities, context manipulation risks, and permission misconfigurations.

- Implement permission controls: Use least-privilege access models to ensure AI Copilots only have access to the data and systems they need.

- Deploy automated monitoring tools: Implement automated tools to monitor AI Copilot behavior and detect anomalies, such as unauthorized access or suspicious data interactions.

- Govern AI usage: Establish clear governance frameworks to oversee AI deployments, prevent rogue AI Copilots, and ensure compliance with security and privacy regulations.

By adopting these best practices, organizations can maximize the benefits of AI Copilots while minimizing the security risks.

The Path Forward: Securing AI Layers

AI Copilots offer significant potential for boosting productivity and improving business outcomes, but they also bring new security risks to the table. From data poisoning to rogue AI Copilots, organizations must take proactive steps to secure this critical layer of AI technology. As we move to more advanced layers of AI, the need for robust security measures only grows.

Stay tuned for the next blog in our series, where we’ll explore Layer 3 and the security challenges that come with other autonomous AI systems. To learn more about securing your AI applications, explore Skyhigh AI solutions.

Other Blogs In This Series: